It's that time of year again when many online services and platforms compile personalised annual reviews for their users. Thanks to the wealth of collected data, user behaviour is often well-analysed and beautifully presented.

Depending on the platform, these yearly reports vary greatly: some focus on a few key points and data sets, while others enrich the data with graphs and visualisations.

I've always found the idea of these annual reviews interesting, but I've never been fully satisfied with the results. Sometimes I wished for a deeper analysis of a particular topic; other times, I missed an informative visualisation. Additionally, the formats of these reviews often change, making it difficult to compare data over multiple years.

Another issue is that these summaries are always created and hosted by the platform provider, meaning they could be taken offline at any time for any reason.

So, I thought:

Why not build my own year in review page? How hard can it be? After all, many online services and platforms provide access to their data via APIs.

I decided to create a personalised annual review, focusing on the data and analyses that interest me. The review would be hosted entirely on my website with no dependencies on third-party providers, giving me full control over its appearance and content.

The Result

The finished product is available on my Year in Review Page. I've divided the data into topics that interest me. Depending on the quantity and format of the data I can get access to, I created more or fewer visualisations for each topic.

Here are the platforms and services I was able to include in my automated annual report:

- My outings, bike rides, and hikes published on Komoot

- My check-ins via Swarm (formerly Foursquare)

- My posts on Mastodon and Pixelfed

- My public coding activity on GitHub

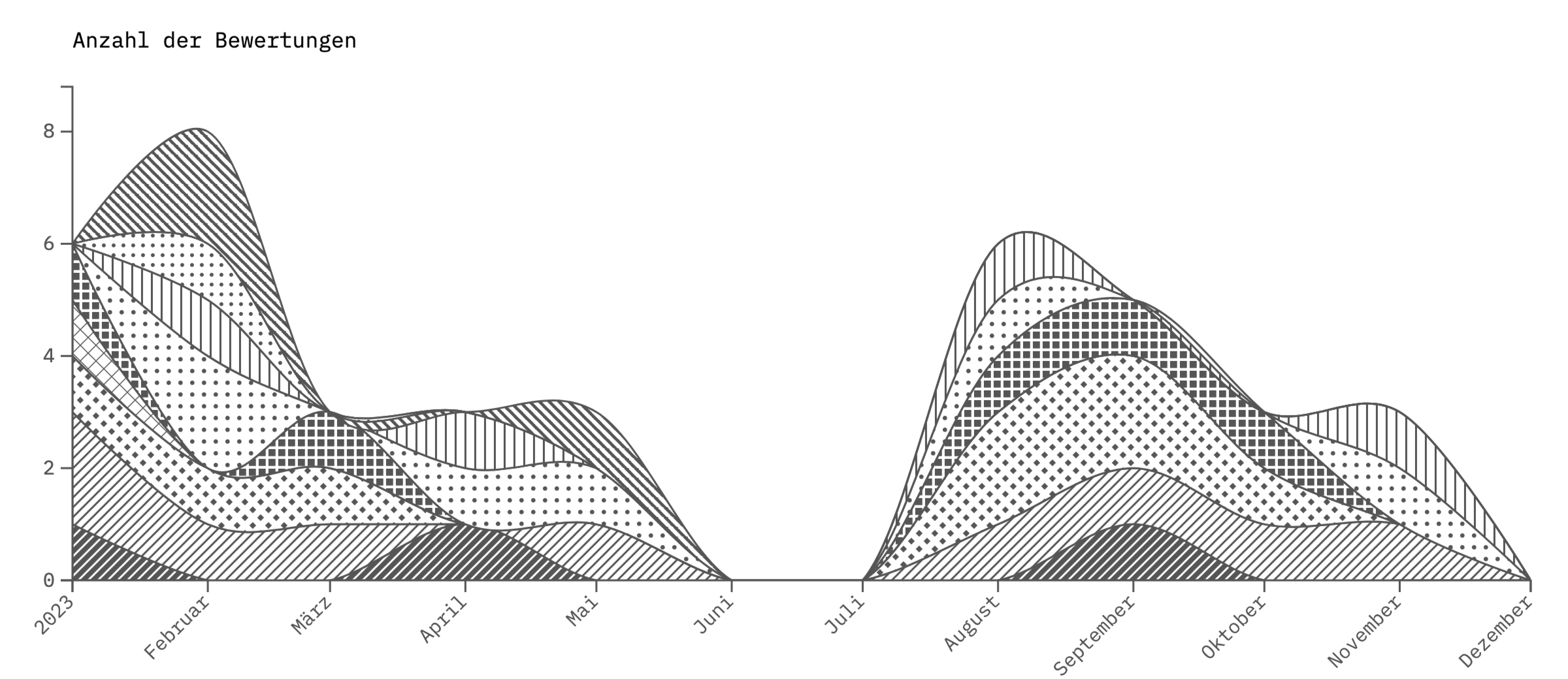

- My film ratings on Letterboxd

- My music listening habits on Last.fm

- My use of public transport on Traewelling

- My video game habits

- My matches in PUBG: Battlegrounds

Technical Overview

Below, I describe the technology I use to create my annual review, how I obtain the data from the individual services, and how I created the associated data visualisations.

A: Collecting Data

Before displaying the data, I need to fetch it from the respective online services. The process varies depending on the platform.

I wrote a script that runs automatically once a day, storing the retrieved data in my own database.

I chose SQLite as the database, as it is widely supported by many programming languages and behaves like a file that I can easily copy. This is particularly handy for debugging. Another advantage of SQLite is that I don't need to set up, run, or maintain a separate server.

For the data collection script, I opted for Node.js. JavaScript's flexibility and dynamic typing are well suited to handling the varied and sometimes inconsistent response formats of online services. Additionally, Node.js supports asynchronous operations using Promises and async/await, which is extremely helpful when communicating with multiple servers simultaneously.
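As a rough sketch of this pattern (an illustration with made-up collector names, not the actual script): each platform gets its own async collector, and `Promise.allSettled` lets all of them run concurrently so that one failing service does not abort the others. The function name `collectAll` and the shape of the result are assumptions.

```javascript
// Run all collectors concurrently and separate successes from failures.
// `collectors` is a hypothetical map: platform name -> function returning records.
async function collectAll(collectors) {
  const names = Object.keys(collectors);

  // The async arrow turns synchronous throws into rejected promises too.
  const settled = await Promise.allSettled(
    names.map(async (name) => collectors[name]())
  );

  const results = {};
  const errors = {};
  settled.forEach((outcome, i) => {
    if (outcome.status === "fulfilled") {
      results[names[i]] = outcome.value;
    } else {
      errors[names[i]] = String(outcome.reason);
    }
  });
  return { results, errors };
}
```

A daily run could then store everything in `results` and log whatever ended up in `errors` for the next attempt.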

Here’s a brief list of the steps required to collect data from each platform:

- Pixelfed (complexity 1/10): Pixelfed provides a public feed for each user. It’s easy to parse and contains all the relevant information.

- Mastodon (complexity 1/10): Mastodon offers users’ public timelines in JSON format, which includes all the details I needed.

- PUBG: Battlegrounds (complexity 2/10): PUBG provides match data via an API, and requires an API key. Due to the API structure, I have to send five sequential requests per match to retrieve the information I'm interested in.

- Steam (complexity 2/10): Steam offers a lot of profile information in XML format. However, the API is poorly documented, and the URL parameters don't work for all titles.

- Traewelling (complexity 2/10): Traewelling provides user feeds as JSON, with GeoJSON files for geodata accessible via simple URLs. However, their API has changed frequently, and I’ve occasionally had to extract data from HTML pages instead.

- GitHub (complexity 3/10): GitHub provides public activity via an Atom feed. However, format changes over the years have required additional parsing logic for accurate analysis.

- Letterboxd (complexity 4/10): Letterboxd provides a feed of user ratings, but additional details about the films (e.g., duration or cast) have to be fetched from the Open Movie Database API, which requires an API key.

- Last.fm (complexity 4/10): Last.fm’s API provides track data (API key required), but details like track duration or genre need to be supplemented via the MusicBrainz API.

- Foursquare/Swarm (complexity 5/10): The Foursquare Node API lacks access to the data I needed, so I created a custom PHP server to fetch and store the relevant data.

- Komoot (complexity 9/10): Komoot unfortunately does not provide a public API for mere mortals (there seems to be an API for business customers). Accordingly, all the data relevant to me has to be extracted from the public website. Access to my data is also restricted: for some time now, Komoot has only provided three photos per entry. If I want to have all the pictures for an entry, I have to log in to the website and download them manually. Komoot provides me with information on the type of sport, the duration and altitude metres as well as the geodata for the route. I receive weather information for a Komoot entry via a request to the API of Pirate Weather (API key required). I can obtain information about the roads travelled on and the surface by sending a request to GeoAPIfy (API key required). I get information about the areas, places and countries crossed from OpenStreetMap, more precisely from the Overpass API. This also kindly returns the Wikipedia entries for the relevant areas. By querying the Wikidata-API, I finally get the city coats of arms and the description text of the areas.
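To illustrate the easy end of this list, here is a minimal sketch of turning Mastodon timeline JSON into flat rows for one year. The fields read from each status (`id`, `created_at`, `url`, `content`, `reblog`, `media_attachments`) are part of Mastodon's Status entity; the function names and the row shape are assumptions made for this example, and the tag stripping is deliberately naive.

```javascript
// Naive HTML tag removal; good enough for a sketch, not for untrusted input.
function stripTags(html) {
  return html.replace(/<[^>]*>/g, "").trim();
}

// Convert Mastodon Status objects into flat rows for one calendar year (UTC).
// The row shape (id, createdAt, url, text, mediaCount) is hypothetical.
function statusesToRows(statuses, year) {
  return statuses
    .filter((s) => s.reblog == null) // skip boosts of other people's posts
    .filter((s) => new Date(s.created_at).getUTCFullYear() === year)
    .map((s) => ({
      id: String(s.id),
      createdAt: new Date(s.created_at).toISOString(),
      url: s.url ?? null,
      text: stripTags(s.content ?? ""),
      mediaCount: (s.media_attachments ?? []).length,
    }));
}
```

Rows in this shape can be written to a database table directly, which keeps the later analysis independent of the original response format.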

I think the list shows very nicely how open source projects (such as Traewelling, Pixelfed and Mastodon) are often ahead when it comes to machine-readable access to your own user data.

I use the CMS Kirby for my website, which is written in PHP. Accordingly, I wrote some PHP code for analysis and data display.

Additionally, many SQL queries are used for data analysis, some of which turned out to be more complex than expected.

For visualisation, I primarily relied on two JavaScript libraries:

I use d3 to generate all the charts. It is very flexible and supports a huge number of chart and visualisation types. There are a lot of free learning resources available for d3, and plenty of examples, so I found it very easy to create the different visualisations.

For the map visualisations, I opted for the maplibre-gl library. It offers a wealth of functions while at the same time allowing me to make many of the decisions concerning the map visualisations myself.

As I had set myself the goal of hosting all the data relating to the annual review myself, the usual map providers such as Google Maps or Mapbox were ruled out for me. Instead, I decided to use Protomaps. Its PMTiles format makes it possible to download map data relatively easily and then host it yourself, while also offering the option of customising the appearance of the maps.
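A minimal sketch of what a self-hosted setup can look like: a function that builds a MapLibre style object pointing at a PMTiles file on your own server. The function name, the example URL, the colours and the choice of layers are assumptions; the source-layer names `water` and `roads` assume the Protomaps basemap schema and may differ for other tile sets.

```javascript
// Build a small MapLibre style that reads vector tiles from a self-hosted
// PMTiles archive. `pmtilesUrl` is wherever you host the file yourself.
function buildMapStyle(pmtilesUrl) {
  return {
    version: 8,
    sources: {
      basemap: {
        type: "vector",
        url: `pmtiles://${pmtilesUrl}`,
        attribution: "© OpenStreetMap contributors",
      },
    },
    layers: [
      { id: "background", type: "background", paint: { "background-color": "#f2efe9" } },
      // Source-layer names below assume the Protomaps basemap schema.
      { id: "water", type: "fill", source: "basemap", "source-layer": "water", paint: { "fill-color": "#a0c8f0" } },
      { id: "roads", type: "line", source: "basemap", "source-layer": "roads", paint: { "line-color": "#888888", "line-width": 1 } },
    ],
  };
}

// In the browser, with maplibre-gl and pmtiles loaded, the wiring is roughly:
//   const protocol = new pmtiles.Protocol();
//   maplibregl.addProtocol("pmtiles", protocol.tile);
//   new maplibregl.Map({
//     container: "map",
//     style: buildMapStyle("https://example.org/maps/region.pmtiles"),
//   });
```

Because the style is a plain object, every colour and layer can be adjusted without involving a third-party map provider.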

I have slightly adapted the source code of the Protomaps pmtiles library to make it possible to display 3D reliefs on the map (this looks particularly impressive on tours in mountainous regions).

Another library that helps a lot when processing geodata is turf.js: It's a great tool for working with GeoJSON, and makes it very easy to calculate areas and distances, for example, or to find intersections of areas.
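To show the kind of calculation this covers, here is a dependency-free stand-in for measuring the length of a GeoJSON route using the haversine formula. It is hand-written for illustration and is not turf.js code (turf offers this via its `length` function); the function names are made up, and the Earth radius constant is an assumption of this sketch.

```javascript
// Mean Earth radius in kilometres (assumed value for this sketch).
const EARTH_RADIUS_KM = 6371.0088;

// Great-circle distance between two GeoJSON positions.
// GeoJSON order is [longitude, latitude]; an optional elevation is ignored.
function haversineKm([lon1, lat1], [lon2, lat2]) {
  const toRad = (deg) => (deg * Math.PI) / 180;
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
}

// Sum the segment lengths of a GeoJSON LineString feature.
function routeLengthKm(lineStringFeature) {
  const coords = lineStringFeature.geometry.coordinates;
  let total = 0;
  for (let i = 1; i < coords.length; i++) {
    total += haversineKm(coords[i - 1], coords[i]);
  }
  return total;
}
```

In practice a library like turf.js is the better choice, since it also handles the other geometry types and operations such as intersections.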

Insights and Surprises

Some key takeaways from this project:

- The Overpass API for OpenStreetMap is challenging to use but offers unexpected and invaluable information, such as links to Wikimedia API data.

- GeoJSON proved to be a great format for geodata, thanks to its clarity and wide support across programming languages.

- SQL queries can quickly become complex, even for simple visualisations.

- Using Web Workers for background data processing improved browser performance significantly.
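As a sketch of the Web Worker point, assuming a hypothetical aggregation step (summing tour distances per month) that would otherwise block the page: the worker file keeps the computation in a plain function and only registers a message handler when it actually runs inside a worker. The file name `worker.js`, the tour fields `date` and `distanceKm`, and `drawChart` are all made up for this example.

```javascript
// worker.js (hypothetical file name)

// Sum distances per calendar month (UTC); index 0 is January.
function distancePerMonth(tours) {
  const totals = new Array(12).fill(0);
  for (const tour of tours) {
    const month = new Date(tour.date).getUTCMonth();
    totals[month] += tour.distanceKm;
  }
  return totals;
}

// Register the handler only when running inside a Web Worker,
// so the same file can also be loaded elsewhere for testing.
if (typeof WorkerGlobalScope !== "undefined" && self instanceof WorkerGlobalScope) {
  self.onmessage = (event) => {
    self.postMessage(distancePerMonth(event.data));
  };
}

// On the main thread, usage would look roughly like this:
//   const worker = new Worker("worker.js");
//   worker.onmessage = (event) => drawChart(event.data); // drawChart is hypothetical
//   worker.postMessage(tours);
```

The main thread stays free to render and respond to input while the worker does the number crunching.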

Favourite Feature

My favourite feature is the visualisation of all my travelled routes. It clearly outlines my personal cycling network. It is also the part that took the most effort, and the one I learnt the most from.

Conclusion

This project not only gave me a comprehensive annual review tailored to my interests but also taught me a lot about API data collection, processing, and visualisation techniques. Hosting all underlying data locally ensures I maintain full control over its content and presentation.
